IoT Edge File Storage

TLDR

This is one of my longer posts, so I think it warrants a TLDR.

I have coded a couple of IoT modules hosted on Docker Hub, and have an IoT Edge manifest that simply needs your Storage Account connection string plugged in. You’re then only a couple of Bash commands away from an end-to-end example of storage and module-to-module communication working on your Edge device. If you want to just grab the manifest and crack on, it’s here. Otherwise here’s my blog post… :)

Storage on the Edge

This post is all about implementing storage on your IoT Edge device. I’ll be starting from a position of already having a Linux VM with the Azure IoT Edge Agent/Hub installed. It’s registered with an IoT Hub, and all set to receive deployed modules from VS Code on my development machine. If you need to get to this point, please look at this page in the Azure documentation: https://docs.microsoft.com/en-us/azure/iot-edge/quickstart-linux.

The two kinds of storage that I’ll be implementing are:

- Local host storage on the Edge

- An Azure Blob storage instance on the Edge that can synchronise to public cloud

To do this, I’ll code two custom modules in C#, but you can use any language (I’ve separately written one in Python too). My custom modules are:

- Simulated File Service

- Filehook service

I will also be deploying the Azure Blob Storage container to the edge.

These three modules, and the messages that connect them, demonstrate an easily deployable end-to-end example of interacting with the local file system and Azure Blob Storage on the edge.

Simulated File Module

Testing out your code on the edge can be a pain. Direct Methods didn’t really help much, and I really need something that’s communicating from inside the Edge environment to be as close to my production state as possible.

The Simulated File Service is the answer. It uses a 30s timer to write new files onto the filesystem, and then writes an outbound message to IoT Hub, which is then served to my Filehook service for processing.
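As a rough illustration of that write-then-notify step, here is a minimal Python sketch (the real module is C#; Python just keeps the example short). The function name is made up for this post, the file name pattern and message shape are copied from the logs further down, and actually sending the message through the IoT SDK is deliberately left out.

```python
import json
import os
import tempfile
from datetime import datetime


def write_file_and_compose_event(folder: str, now: datetime) -> str:
    """Write a timestamped text file into `folder` and return the event body."""
    # File name pattern as seen in the module logs, e.g. 09-49-39--14-Jan.txt
    file_name = now.strftime("%H-%M-%S--%d-%b") + ".txt"
    with open(os.path.join(folder, file_name), "w", encoding="utf-8") as handle:
        handle.write("Written at " + now.isoformat() + "\n")
    # The event only carries the path relative to the shared folder.
    return json.dumps({"ShareRelativeFilePath": "/" + file_name}, separators=(",", ":"))


if __name__ == "__main__":
    demo_folder = tempfile.mkdtemp()  # stand-in for the bound host folder
    print(write_file_and_compose_event(demo_folder, datetime.now()))
```

In the module itself you would call something like this from the 30s timer callback and hand the returned string to whatever sends the outbound message.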

Filehook Module

The Filehook service reacts to a message from IoT Hub that contains the information about the written file. It receives the event, accesses the written file and then uses the Azure Storage SDK to write the file to my local Azure Blob Storage account. It also raises an event, which could be sent to a brokered endpoint for another module to perform some other logic with the file, for example a Machine Learning image processor.
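The "access the written file" step is worth a sketch, because the path in the event is relative and the module has to work out where the file really lives. This Python version mirrors the fallback order visible in the FileCopyModule logs later on (a primary folder, then the raw path from the event, then the alternative folder). The function name is hypothetical, and I’m assuming the message body is a single JSON object.

```python
import json
import os
from typing import Optional


def resolve_input_path(event_body: str, primary_folder: str, alt_folder: str) -> Optional[str]:
    """Return the first existing location of the file named in the event."""
    relative_path = json.loads(event_body)["ShareRelativeFilePath"]
    candidates = [
        # 1. relative to the primary input folder (/home/input in the logs)
        os.path.join(primary_folder, relative_path.lstrip("/")),
        # 2. the path exactly as it arrived in the event
        relative_path,
        # 3. relative to the alternative folder from the environment
        os.path.join(alt_folder, relative_path.lstrip("/")),
    ]
    for candidate in candidates:
        if os.path.isfile(candidate):
            return candidate
    return None  # nothing found; the caller decides how to report it
```

Returning None rather than raising keeps the decision about logging or retrying with the caller, which suits a message handler that shouldn’t crash on a missing file.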

Local storage on the edge

Core to being able to leverage the local host file system is providing a key-value pair when the custom module container is created on the edge. This takes the form of a Bind, specified in the deployment template/manifest.

"createOptions": {

"HostConfig": {

"Binds":["/tmp/filebeat/:/tmp/altdropdir/"]

}

}

This definition instructs the runtime to mount the local host directory /tmp/filebeat/ at /tmp/altdropdir/ inside the module’s container. You’ll then usually use environment variables to tell your module code which directory to look in… but you could always hard-code it if you really wanted.

"env": {

"altInputFolder": {

"value": "/tmp/altdropdir/"

},
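To make the relationship between the bind and the environment variable concrete, here is a small Python sketch. It splits a bind string into its host and container halves, translates a host file path into the path the module will see, and reads the folder setting from the environment. All three function names are illustrative and the default folder is an assumption; only the bind string and the altInputFolder variable come from the manifest above.

```python
import os
import posixpath
from typing import Tuple


def parse_bind(bind: str) -> Tuple[str, str]:
    """Split a Docker bind of the form host_path:container_path[:options]."""
    host_path, container_path = bind.split(":")[:2]
    return host_path, container_path


def container_path_for(bind: str, host_file: str) -> str:
    """Translate a file path on the host into the path seen inside the module."""
    host_dir, container_dir = parse_bind(bind)
    relative = posixpath.relpath(host_file, host_dir)
    if relative == ".." or relative.startswith("../"):
        raise ValueError(f"{host_file} is not inside {host_dir}")
    return posixpath.join(container_dir, relative)


def folder_from_env(name: str, default: str) -> str:
    """Read a folder setting from the environment, always ending in a slash."""
    folder = os.environ.get(name) or default
    return folder if folder.endswith("/") else folder + "/"
```

So a file the host sees as /tmp/filebeat/a.txt is /tmp/altdropdir/a.txt to the module, and folder_from_env("altInputFolder", "/home/input") is how the module would find out where to look.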

Azure Blob storage on the edge

You’re able to deploy an instance of Azure Blob storage as a container, which can be great for development environments. In our case, it’s great for having a consistent storage endpoint on the edge that can synchronise its files up to a cloud storage account.

Getting it deployed

As it’s available as a publicly hosted container, getting it running is super easy. We just need a little configuration to give our instance of Azure blob storage a name and an access key. We then use a bind to let the files be kept on the host, and we tell the Blob service which TCP port to listen on.

"azureblobstorageoniotedge": {

"version": "1.0",

"type": "docker",

"status": "running",

"restartPolicy": "always",

"settings": {

"image": "mcr.microsoft.com/azure-blob-storage:latest",

"createOptions": {

"Env":[

"LOCAL_STORAGE_ACCOUNT_NAME=iotedgedrama",

"LOCAL_STORAGE_ACCOUNT_KEY=itsNotReallyMyAccountKey=="

],

"HostConfig":{

"Binds": ["/srv/containerdata:/blobroot"],

"PortBindings":{

"11002/tcp": [{"HostPort":"11002"}]

}

}

}

}

}
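If you ever need to produce this module definition from a script rather than editing JSON by hand, something like the following Python sketch would do it. The function name and its parameters are invented for illustration. One assumption to flag: as far as I know, a generated deployment manifest stores createOptions as a JSON string rather than a nested object (the VS Code tooling does that conversion from the template), so the sketch serialises it that way.

```python
import json


def blob_module(account_name: str, account_key: str, host_dir: str, port: int = 11002) -> dict:
    """Build the module definition for Azure Blob Storage on IoT Edge."""
    create_options = {
        "Env": [
            f"LOCAL_STORAGE_ACCOUNT_NAME={account_name}",
            f"LOCAL_STORAGE_ACCOUNT_KEY={account_key}",
        ],
        "HostConfig": {
            # Keep the blob data on the host so it survives container restarts.
            "Binds": [f"{host_dir}:/blobroot"],
            # Expose the blob endpoint on the same port number on the host.
            "PortBindings": {f"{port}/tcp": [{"HostPort": str(port)}]},
        },
    }
    return {
        "version": "1.0",
        "type": "docker",
        "status": "running",
        "restartPolicy": "always",
        "settings": {
            "image": "mcr.microsoft.com/azure-blob-storage:latest",
            # Assumption: manifest form, where createOptions is a JSON string.
            "createOptions": json.dumps(create_options),
        },
    }


if __name__ == "__main__":
    module = blob_module("iotedgedrama", "itsNotReallyMyAccountKey==", "/srv/containerdata")
    print(json.dumps({"azureblobstorageoniotedge": module}, indent=2))
```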

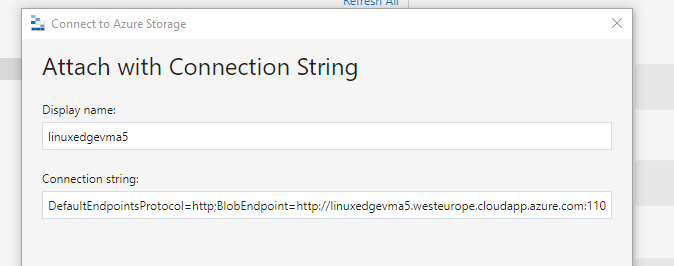

Connecting to the storage

Using Azure Storage Explorer is by far the quickest way to test whether the storage and synchronisation are working.

We simply treat it like any other Azure Storage account, although one that doesn’t have HTTPS or Azure AD authentication. We therefore use a specifically formatted HTTP connection string to reach the Storage Account from our local development machine. Remember to open port 11002 on your firewall/NSG if you’re using one.

DefaultEndpointsProtocol=http;BlobEndpoint=http://linuxedgevma5.westeurope.cloudapp.azure.com:11002/iotedgedrama;AccountName=iotedgedrama;AccountKey=iYMKd+VQXJDxNwGInLuzl9tA5oUlxTcZnIVhfcGoUnRkXgGl7CROA7fYjOvXd+qFulujnhqjsdtYZpfNHqAVPg==;

If we want to access the Azure Blob service from another module hosted on the same Edge device, we tweak the endpoint to use the name of the Azure Blob storage module, like so:

DefaultEndpointsProtocol=http;BlobEndpoint=http://azureblobstorageoniotedge:11002/iotedgedrama;AccountName=iotedgedrama;AccountKey=iYMKd+VQXJDxNwGInLuzl9tA5oUlxTcZnIVhfcGoUnRkXgGl7CROA7fYjOvXd+qFulujnhqjsdtYZpfNHqAVPg==;
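The only thing that differs between the two connection strings is the host in BlobEndpoint, so it can help to build them from one function. This is a small Python sketch with made-up helper names. The format itself is copied from the strings above, and the key in the usage lines is a placeholder.

```python
def build_connection_string(host: str, account_name: str, account_key: str, port: int = 11002) -> str:
    """Compose an HTTP connection string for the blob module running on `host`."""
    endpoint = f"http://{host}:{port}/{account_name}"
    return (
        "DefaultEndpointsProtocol=http;"
        f"BlobEndpoint={endpoint};"
        f"AccountName={account_name};"
        f"AccountKey={account_key};"
    )


def parse_connection_string(connection_string: str) -> dict:
    """Split a connection string into its Name=Value settings."""
    settings = {}
    for segment in connection_string.split(";"):
        if segment:
            # Split on the first '=' only: account keys end in '=' padding.
            name, _, value = segment.partition("=")
            settings[name] = value
    return settings


if __name__ == "__main__":
    # From a development machine, use the VM's DNS name;
    # from another module on the same device, use the blob module's name.
    print(build_connection_string("linuxedgevma5.westeurope.cloudapp.azure.com", "iotedgedrama", "placeholderKey=="))
    print(build_connection_string("azureblobstorageoniotedge", "iotedgedrama", "placeholderKey=="))
```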

Implicit file creation doesn’t work

Although the Blob module leverages the host file system, creating files directly in the host directory doesn’t result in those files being accepted into Azure Blob Storage. You need to add files properly using the Azure Storage SDK: https://www.nuget.org/packages/Azure.Storage.Blobs
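For anyone following along in Python rather than C#, "adding files properly" looks roughly like this with the azure-storage-blob package. Treat it as a sketch under assumptions: the function names are mine, the target container is assumed to exist already, and I haven’t covered which SDK versions the edge module accepts. The SDK import sits inside the function so the URL helper can be used without the package installed.

```python
import os


def blob_url(blob_endpoint: str, container: str, file_path: str) -> str:
    """The URL a file is expected to end up at once uploaded."""
    return f"{blob_endpoint.rstrip('/')}/{container}/{os.path.basename(file_path)}"


def upload_file(connection_string: str, container: str, file_path: str) -> str:
    """Upload one local file as a block blob and return the blob's URL."""
    # Requires: pip install azure-storage-blob
    from azure.storage.blob import BlobServiceClient

    service = BlobServiceClient.from_connection_string(connection_string)
    blob = service.get_blob_client(container=container, blob=os.path.basename(file_path))
    with open(file_path, "rb") as data:
        # overwrite=True so re-processing the same file doesn't raise.
        blob.upload_blob(data, overwrite=True)
    return blob.url
```

Called with the module-to-module connection string, the "filestoazure" container and a path such as /tmp/altdropdir/09-49-39--14-Jan.txt, this does the same job as the copy step in the FileCopy module.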

Pulling the example all together

My example uses three compute modules:

- AzureBlobStorageOnIotEdge - An instance of Azure Blob storage running on the Edge. It will copy any files written to its “filestoazure” container to a Cloud Azure Storage Account

- FsToucher - A C# module that every 30 seconds writes a new file down to the host-mapped filesystem and then raises a FileEvent message onto the IoT Hub

- FileCopyModule - A C# module that reacts to the previously described FileEvent by copying the file to the Azure Blob storage service running on the Edge.

To get this running in your environment you’ll need these prerequisites:

- Created an IoT Hub in Azure

- Deployed a Linux VM, and registered it as an IoT Edge device with the IoT hub

- Visual Studio Code on your development machine with the IoT tools extension

Once you have the prerequisites:

- Create an Azure Storage account in your Azure subscription to receive the files replicated from the edge. Create a blob container in the storage account called iotdestinationup. Make a note of the connection string for your Storage Account, found in the Access Keys section of the Storage Account in the Azure Portal.

- Clone my repository (or just grab the 1 file you need!), and open the folder in VS Code.

- Set the value of cloudStorageConnectionString at the bottom of the deployment.template.json file to the one from your Storage Account

- Save, then right-click the deployment.template.json file and select Generate IoT Edge Deployment Manifest.

- In the config folder, locate the deployment.amd64.json file that was just created, right-click it and select Create Deployment for Single Device.

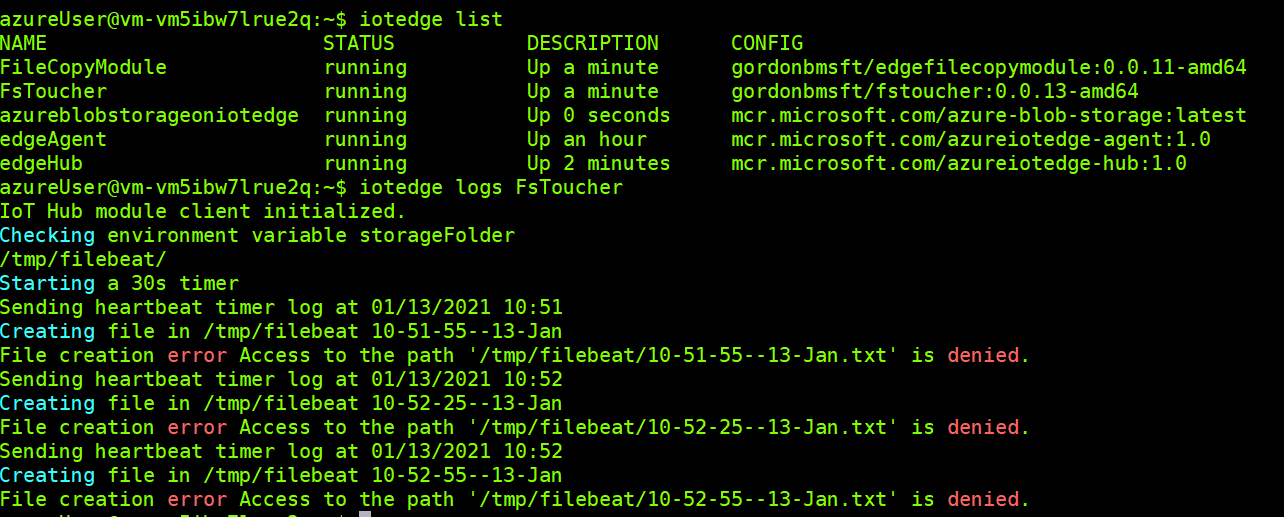

- SSH onto your Linux VM that’s acting as the Edge compute, and run the command iotedge list, which will show you the status of the deployed modules. Run the command iotedge logs FsToucher to view the logs from the first code module and make sure that the files are being created and written down to the host filesystem.

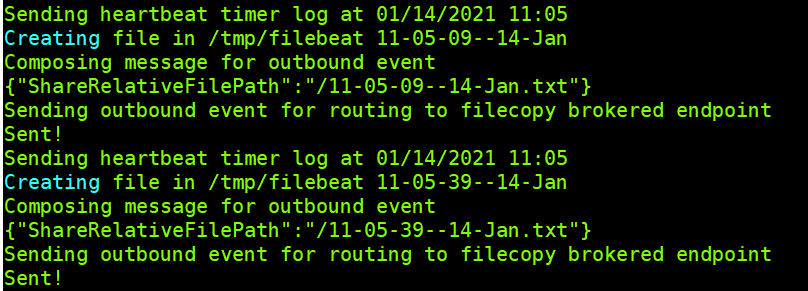

At this point, I’d expect you to see something like this.

We have a file system access problem. Our custom module doesn’t have permission to create a file on the host. To enable this I’ll change the permissions for the folder.

sudo chmod -R 777 /tmp/filebeat/
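A module can catch this sort of problem at start-up rather than on the first timer tick. Here is a minimal Python sketch of a pre-flight check. The function name is hypothetical, and it simply tries to create (and immediately remove) a temporary file in the folder.

```python
import tempfile


def can_write(folder: str) -> bool:
    """Return True if a file can be created in `folder`."""
    try:
        # The temporary file is deleted again as soon as the block exits.
        with tempfile.NamedTemporaryFile(dir=folder):
            return True
    except OSError:
        # Covers a missing folder as well as "Permission denied".
        return False


if __name__ == "__main__":
    folder = "/tmp/filebeat/"
    print(f"{folder} writable: {can_write(folder)}")
```

Logging the result once when the module starts makes the permissions issue obvious in iotedge logs before any file events are attempted.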

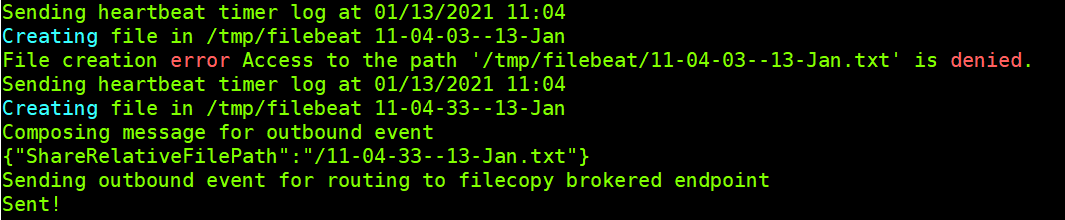

Then we should find that the timer works successfully.

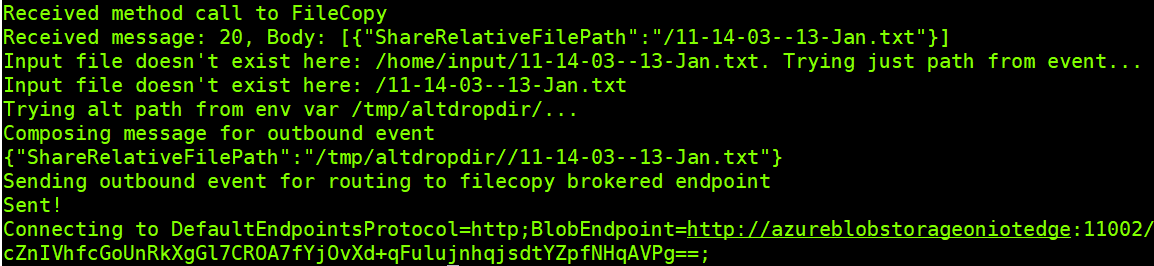

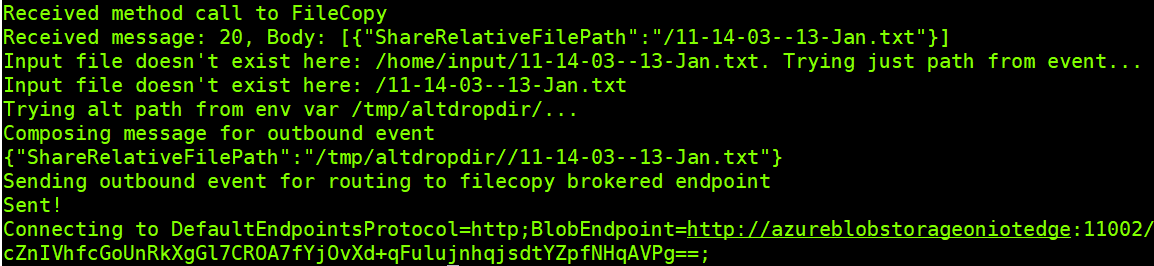

Now that the simulated writer is doing its thing, we should check the logs for the FileCopy module.

We can see, however, that it then hits an exception connecting to the Blob Storage endpoint:

Caught exception: Retry failed after 6 tries. (Name or service not known) (Name or service not known) (Name or service not known) (Name or service not known) (Name or service not known) (Name or service not known)
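"Name or service not known" is a name-resolution failure: the host name in the connection string couldn’t be looked up. My reading is that this points at the target container not being up, rather than at the storage code itself. A quick way to confirm from inside a module is a lookup like this Python sketch (the function name is illustrative):

```python
import socket


def can_resolve(hostname: str, port: int = 11002) -> bool:
    """Return True if `hostname` resolves to at least one address."""
    try:
        socket.getaddrinfo(hostname, port)
        return True
    except OSError:
        # socket.gaierror (a subclass of OSError) is what a failed lookup raises.
        return False


if __name__ == "__main__":
    name = "azureblobstorageoniotedge"
    print(f"{name} resolves: {can_resolve(name)}")
```

If this returns False for the blob module’s name, the next place to look is that module’s own status and logs.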

If we interrogate the logs for the Azure Blob module, we’ll see an error like this:

Starting Azure Blob Storage on IoT Edge, version 1.4.0.0.

[2021-01-13 11:17:20.393] [error ] [tid 1] [DataStore.cc:56] [Initialize] mkdir failed with error:Permission denied

Another permissions error to deal with. If you read the documentation on deploying Azure Blob Storage on the edge, you’ll see it can be resolved with:

sudo mkdir /srv/containerdata

sudo chown -R 11000:11000 /srv/containerdata

sudo chmod -R 700 /srv/containerdata
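Those three commands create the directory, hand it to user and group 11000, and restrict it to the owner. If you want to verify the result from a script (for example as part of provisioning), a Python sketch along these lines would report anything that doesn’t match. The function name is hypothetical and the defaults are just the values from the commands above.

```python
import os
import stat
from typing import List


def check_blob_root(path: str, uid: int = 11000, gid: int = 11000, mode: int = 0o700) -> List[str]:
    """Return a list of mismatches between `path` and the expected owner and mode."""
    info = os.stat(path)
    problems = []
    if info.st_uid != uid:
        problems.append(f"owner is {info.st_uid}, expected {uid}")
    if info.st_gid != gid:
        problems.append(f"group is {info.st_gid}, expected {gid}")
    actual_mode = stat.S_IMODE(info.st_mode)  # permission bits only
    if actual_mode != mode:
        problems.append(f"mode is {oct(actual_mode)}, expected {oct(mode)}")
    return problems


if __name__ == "__main__":
    for problem in check_blob_root("/srv/containerdata"):
        print(problem)
```

An empty list means the directory matches what the commands set up.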

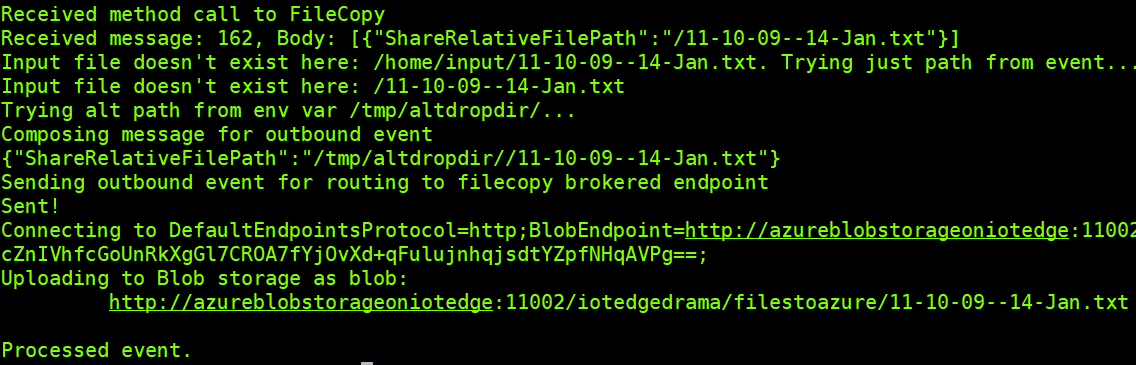

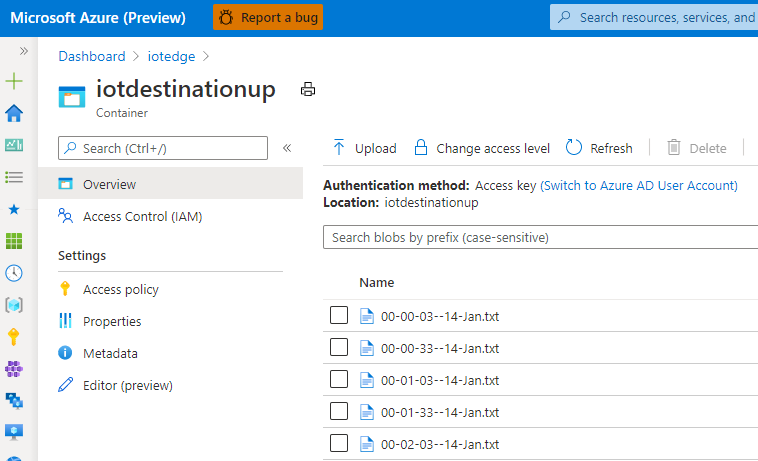

With these changes made, the end-to-end storage process is complete. You should see logs resembling these, and by logging into the Azure Portal you’ll find your Cloud Storage Account has received the edge-generated file.

azureUser@vm-vm5ibw7lrue2q:~$ iotedge list

NAME STATUS DESCRIPTION CONFIG

FsToucher running Up 0 seconds gordonbmsft/fstoucher:0.0.13-amd64

edgeAgent running Up a day mcr.microsoft.com/azureiotedge-agent:1.0

edgeHub running Up a day mcr.microsoft.com/azureiotedge-hub:1.0

azureUser@vm-vm5ibw7lrue2q:~$ iotedge logs FsToucher

IoT Hub module client initialized.

Checking environment variable storageFolder

/tmp/filebeat/

Starting a 30s timer

Sending heartbeat timer log at 01/14/2021 09:49

Creating file in /tmp/filebeat 09-49-39--14-Jan

Composing message for outbound event

{"ShareRelativeFilePath":"/09-49-39--14-Jan.txt"}

Sending outbound event for routing to filecopy brokered endpoint

Sent!

azureUser@vm-vm5ibw7lrue2q:~$ iotedge list

NAME STATUS DESCRIPTION CONFIG

FileCopyModule running Up 36 seconds gordonbmsft/edgefilecopymodule:0.0.11-amd64

FsToucher running Up 39 seconds gordonbmsft/fstoucher:0.0.13-amd64

azureblobstorageoniotedge running Up 34 seconds mcr.microsoft.com/azure-blob-storage:latest

edgeAgent running Up a day mcr.microsoft.com/azureiotedge-agent:1.0

edgeHub running Up a day mcr.microsoft.com/azureiotedge-hub:1.0

azureUser@vm-vm5ibw7lrue2q:~$ iotedge logs FileCopyModule

C# FileCopy Module started.

Checking environment variable altInputFolder

/tmp/altdropdir/

Received method call to FileCopy

Received message: 1, Body: [{"ShareRelativeFilePath":"/09-49-39--14-Jan.txt"}]

Input file doesn't exist here: /home/input/09-49-39--14-Jan.txt. Trying just path from event...

Input file doesn't exist here: /09-49-39--14-Jan.txt

Trying alt path from env var /tmp/altdropdir/...

Composing message for outbound event

{"ShareRelativeFilePath":"/tmp/altdropdir//09-49-39--14-Jan.txt"}

Sending outbound event for routing to filecopy brokered endpoint

Sent!

Connecting to DefaultEndpointsProtocol=http;BlobEndpoint=http://azureblobstorageoniotedge:11002/iotedgedrama;AccountName=iotedgedrama;AccountKey=iYMKd+VQXJDxNwGInLuzl9tA5oUlxTcZnIVhfcGoUnRkXgGl7CROA7fYjOvXd+qFulujnhqjsdtYZpfNHqAVPg==;

Uploading to Blob storage as blob:

http://azureblobstorageoniotedge:11002/iotedgedrama/filestoazure/09-49-39--14-Jan.txt

Processed event.

azureUser@vm-vm5ibw7lrue2q:~$ iotedge logs azureblobstorageoniotedge | grep 'PutBlob completed'

[2021-01-14 09:49:40.306] [info ] [tid 14] [BlobInterface.cc:209] [PutBlob] PutBlob completed. Container:filestoazure Blob:09-49-39--14-Jan.txt
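If you’d rather check for uploads from a script than by eye, the grep output is regular enough to parse. This Python sketch pulls the timestamp, container and blob name out of a "PutBlob completed" line. The pattern is written against the single log line above, so treat the exact format as an assumption that may differ between module versions.

```python
import re
from typing import Optional

# Matches lines such as:
# [2021-01-14 09:49:40.306] [info ] [tid 14] [BlobInterface.cc:209] [PutBlob] PutBlob completed. Container:filestoazure Blob:09-49-39--14-Jan.txt
PUT_BLOB_PATTERN = re.compile(
    r"^\[(?P<timestamp>[^\]]+)\].*PutBlob completed\. "
    r"Container:(?P<container>\S+) Blob:(?P<blob>.+)$"
)


def parse_put_blob(line: str) -> Optional[dict]:
    """Return timestamp, container and blob for a PutBlob line, else None."""
    match = PUT_BLOB_PATTERN.match(line.strip())
    return match.groupdict() if match else None
```

Feeding each line of the module’s log output through parse_put_blob and keeping the results that aren’t None gives you a list of every file that has landed in the edge storage.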

We can then check the Azure Portal for the files.

Adapting this for Azure Stack Edge Pro devices

The two key areas where Azure Stack Edge is different from a Linux VM as the edge device are:

- File System. Interacting with the host filesystem is governed through the Storage Gateway. When you create a File Share on the Stack Edge Storage Gateway, you need to select “Use the share with Edge compute” option. This will then provide you with a Local mount point for Edge compute modules. It’s this path that we’ll use in the binds defined in the deployment.template.json file. Because the Storage Gateway controls the access permissions to the share, you trade worrying about ssh and chmod for other problems :)

- Logging. Getting the logs for the deployed modules (which are running as containers) needs to be done through the Kubernetes Dashboard. You can find a description on how to do this here: https://docs.microsoft.com/en-us/azure/databox-online/azure-stack-edge-gpu-monitor-kubernetes-dashboard#view-container-logs

Troubleshooting

Azure Storage Explorer

There is an error you can receive from Azure Storage Explorer: “Unable to retrieve child resources. Details: { “name”: “RestError”, “message”: “The value for one of the HTTP headers is not in the correct format” }”

The solution is to revert to an older build. I usually use Choco for package management (here’s the correct build to install: https://chocolatey.org/packages/microsoftazurestorageexplorer/1.14.0); however, there did seem to be an issue where only the newer 1.17 build gets downloaded. You can therefore get hold of the correct build here: https://github.com/microsoft/AzureStorageExplorer/releases/tag/v1.14.0

C# Azure Storage SDK

The same issue that Azure Storage Explorer has is present in the Azure Storage SDK. At the time of writing, 12.7.0 is the latest version, but it results in the following error:

HeaderName: x-ms-version HeaderValue: 2020-02-10 ExceptionDetails: The value 2020-02-10 provided for request header x-ms-version is invalid.Microsoft.Cis.Services.Nephos.Common.Protocols.Rest.InvalidHeaderProtocolException: The value 2020-02-10 provided for request header x-ms-version is invalid.

If you revert to an older SDK version in your code, then it’ll work just fine. Here’s the version I chose: <PackageReference Include="Azure.Storage.Blobs" Version="12.4.2" />