Scheduled JSON post from Linux using cron and du, to Logic Apps and Power BI

This post covers getting regular disk-space data out of Linux and sending it to an Azure Logic App, which submits it to Power BI for visualisation.

So in my last post, I set up an rsync server to take a load of files from my “on-premises” storage. I have a reasonable internet connection, but I'm impatient and was always checking progress by logging into the rsync server and checking directory sizes with the du command:

du -h --max-depth=1

-h gives human-readable sizes, and --max-depth=1 reports only on the top-level directories.
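To see what --max-depth=1 does without pointing it at real data, here is a small sketch that builds a throwaway directory tree and runs the same du command against it. The directory and file names are made up to echo the sample output later in this post; sizes depend on the filesystem, so no output is shown.

```shell
#!/bin/bash
# Throwaway tree; the names are hypothetical, chosen to echo this post's sample output.
demo_dir=$(mktemp -d)
trap 'rm -rf "$demo_dir"' EXIT

mkdir -p "$demo_dir/mydatadir/nested" "$demo_dir/anotherdatadir"
# Write 1 MiB of zeros so mydatadir has a visible size.
head -c 1048576 /dev/zero > "$demo_dir/mydatadir/nested/file.bin"

# Lists mydatadir, anotherdatadir and the starting directory itself;
# nested/ is counted inside mydatadir but does not get its own line.
du -h --max-depth=1 "$demo_dir"
```

Dropping --max-depth=1 would list nested/ as well, which is exactly the noise the flag keeps out of the report.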

du output to json

So the first step is to take the output I like and get it into a more useful format: JSON.

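Here’s what I came up with. It’s a little hacky: to produce valid JSON, I add an empty object at the end of the array rather than messing around with removing the last comma. The -c flag adds a grand total line, --time adds the latest modification time within each directory, and the sed script relies on GNU sed for the \t and \s escapes.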

RSYNCDIRDU=$(du -c --time /datadrive/rntwo/ --max-depth=1 | sed '1 i\
{"rsyncdirs": [
s/\([^ \t]*\) *\t\([^ ]*\s[^ \t]*\) *\t\([^\n]*\)/ {\
"size" : "\1",\
"modified": "\2",\
"path": "\3"},/
$ a\{}]}')

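This outputs valid JSON, which I can then curl out to a waiting Logic App, a Power BI dataset, or any other web service. Tidied up, it looks like this (these sizes are human-readable, as du -h prints them; the command as written reports plain kilobyte counts, which is what the GB calculation in the Logic App later assumes):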

{
  "rsyncdirs": [
    {
      "size": "408G",
      "modified": "2018-01-11 19:17",
      "path": "/datadrive/rntwo/mydatadir"
    },
    {
      "size": "4.0K",
      "modified": "2018-01-09 14:15",
      "path": "/datadrive/rntwo/anotherdatadir"
    },
    {
      "size": "699G",
      "modified": "2018-01-13 09:27",
      "path": "/datadrive/rntwo/"
    },
    {
      "size": "699G",
      "modified": "2018-01-13 09:27",
      "path": "total"
    },
    {}
  ]
}
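If the trailing empty object bothers you, awk can remember whether it has already printed an item and only emit the separating comma from the second item on. This is a sketch rather than the approach used in this post: du_to_json is a hypothetical helper name, and it assumes tab-separated du -c --time output on stdin. Unlike the sed version, it also escapes backslashes and double quotes in paths.

```shell
#!/bin/bash
# Hypothetical helper: read tab-separated `du -c --time` output (size, mtime, path)
# on stdin and print {"rsyncdirs": [...]} with no trailing empty object.
du_to_json() {
  awk -F'\t' '
    # Escape backslashes and double quotes character by character so that
    # unusual paths still produce valid JSON.
    function esc(s,   out, i, c) {
      out = ""
      for (i = 1; i <= length(s); i++) {
        c = substr(s, i, 1)
        if (c == "\\" || c == "\"") out = out "\\"
        out = out c
      }
      return out
    }
    BEGIN { printf "{\"rsyncdirs\": [" }
    {
      # A comma goes before every object except the first, so none is left dangling.
      if (NR > 1) printf ","
      printf "\n  {\"size\": \"%s\", \"modified\": \"%s\", \"path\": \"%s\"}", esc($1), esc($2), esc($3)
    }
    END { printf "\n]}\n" }
  '
}
```

It slots into the same place as the sed script: RSYNCDIRDU=$(du -c --time /datadrive/rntwo/ --max-depth=1 | du_to_json).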

Logic App

So I chose to post the data to an Azure Logic App. That way I can take advantage of its pre-built connectors for email and Power BI whenever I change my mind about what to do with the data.

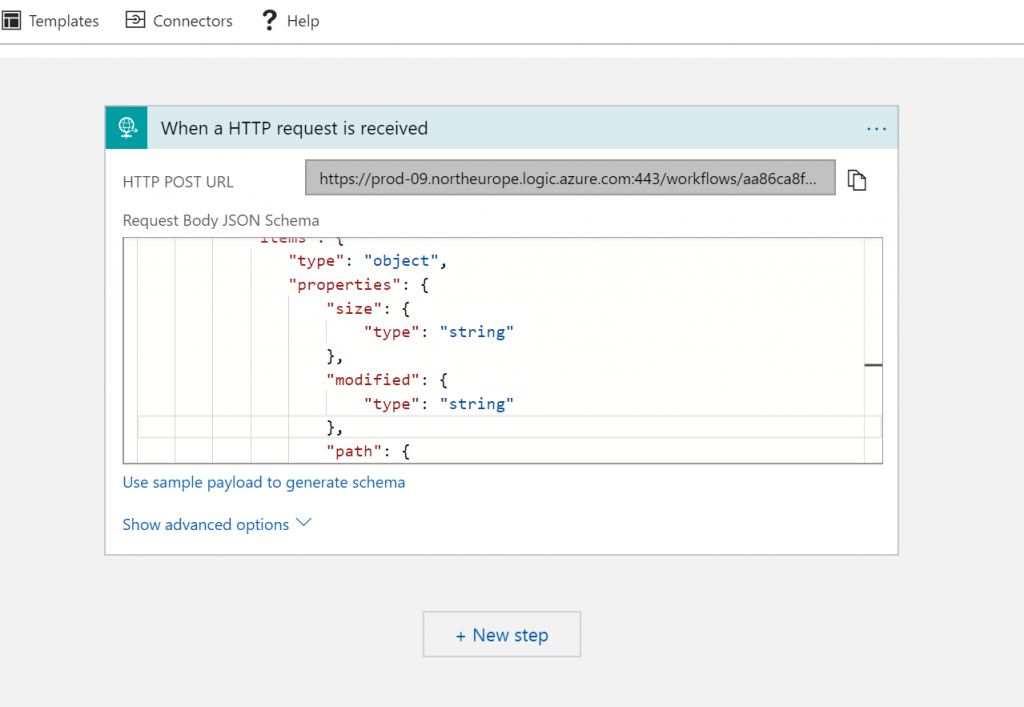

I create a new Logic App in Azure, choose the HTTP Request/Response trigger template and paste in the sample JSON; it generates the schema for me and I’m ready to go.

Curling the json

So now that I’ve got a URL from the Logic App to post to, I can create the curl command on my Linux box.

curl -i \
  -H "Accept: application/json" \
  -H "Content-Type: application/json" \
  -X POST --data "$RSYNCDIRDU" "https://the-url-to-my-logic-app"

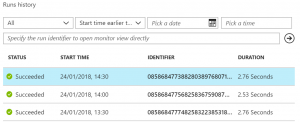

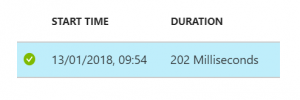

Let's check that it worked by looking at the Logic App run history.
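Besides the run history, curl can report the outcome itself. In this sketch, post_json is a hypothetical helper name: it prints only the HTTP status code and returns non-zero when the connection fails or the server answers with an error status. A request trigger without a Response action normally replies 202 Accepted.

```shell
#!/bin/bash
# Hypothetical helper: POST a JSON string to a URL and print just the HTTP status code.
# --fail makes curl exit non-zero on HTTP status >= 400; --silent --show-error hides
# the progress meter but still prints connection errors to stderr.
post_json() {
  local url=$1 payload=$2
  curl --silent --show-error --fail \
    --output /dev/null --write-out '%{http_code}\n' \
    -H "Content-Type: application/json" \
    --data "$payload" "$url"
}
```

For example: post_json "https://the-url-to-my-logic-app" "$RSYNCDIRDU" || echo "post to Logic App failed" >&2. Passing --data already makes curl send a POST, so -X POST isn't needed here.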

Scheduling the curl

OK, so it all works as planned. Let's get this reporting the data on a regular schedule.

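First, let's put the code in a shell script file, /datadrive/rntwo/cron-du-report.sh (the path the crontab entry points at), and make it executable with chmod +x: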

#!/bin/bash
# Get the JSON data into a variable from du
RSYNCDIRDU=$(du -c --time /datadrive/rntwo/ --max-depth=1 | sed '1 i\
{"rsyncdirs": [
s/\([^ \t]*\) *\t\([^ ]*\s[^ \t]*\) *\t\([^\n]*\)/ {\
"size" : "\1",\
"modified": "\2",\
"path": "\3"},/
$ a\{}]}')

# Call the Logic App
curl -i \
  -H "Accept: application/json" \
  -H "Content-Type: application/json" \
  -X POST --data "$RSYNCDIRDU" "https://the-url-to-my-logic-app"
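Cron runs the script unattended, so if du fails, the sed step still produces JSON and it gets posted anyway. This is a stricter sketch of the same logic, wrapped in a hypothetical report_du function. It uses set -o pipefail so the JSON is only posted when du succeeds, and --fail so HTTP errors show up in curl's exit status. Note that du also exits non-zero when it hits unreadable subdirectories, so this variant skips those runs too.

```shell
#!/bin/bash
# Hypothetical report_du: the same du | sed pipeline and curl call as the script above,
# in a function with a subshell body so `set -o pipefail` does not leak to the caller.
report_du() (
  set -o pipefail
  dir=$1
  url=$2
  # With pipefail, a du error (missing or unreadable directory) fails the whole
  # pipeline even though sed itself succeeds, so nothing is posted.
  if ! json=$(du -c --time "$dir" --max-depth=1 | sed '1 i\
{"rsyncdirs": [
s/\([^ \t]*\) *\t\([^ ]*\s[^ \t]*\) *\t\([^\n]*\)/ {\
"size" : "\1",\
"modified": "\2",\
"path": "\3"},/
$ a\{}]}'); then
    echo "$(date '+%F %T') du failed for $dir, nothing posted" >&2
    return 1
  fi
  # --fail makes curl exit non-zero when the server answers with HTTP >= 400.
  curl --silent --show-error --fail \
    -H "Content-Type: application/json" \
    --data "$json" "$url"
)
```

In cron-du-report.sh this would be called as report_du /datadrive/rntwo/ "https://the-url-to-my-logic-app".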

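Then let's schedule it for every 30 minutes. Note that this installs a brand-new crontab for the user, replacing any existing entries, so check crontab -l first.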

crontab - <<'EOF'
SHELL=/bin/bash
*/30 * * * * /datadrive/rntwo/cron-du-report.sh
EOF
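Because piping a heredoc into crontab replaces the whole crontab, an alternative is to merge the entry into whatever is already installed. This sketch uses a hypothetical merge_cron_entry helper. It reads the current crontab on stdin and appends the entry only if an identical line isn't there yet.

```shell
#!/bin/bash
# Hypothetical helper: print the crontab read from stdin unchanged, then append
# ENTRY only if an identical line is not already present.
merge_cron_entry() {
  local entry=$1 existing
  existing=$(cat)
  # Re-print the current entries (if any) exactly as they were.
  if [ -n "$existing" ]; then
    printf '%s\n' "$existing"
  fi
  # -x matches whole lines only; -F treats the entry as a literal string, not a regex.
  if ! printf '%s\n' "$existing" | grep -qxF -- "$entry"; then
    printf '%s\n' "$entry"
  fi
}
```

Installing it would then look like: crontab -l 2>/dev/null | merge_cron_entry '*/30 * * * * /datadrive/rntwo/cron-du-report.sh' | crontab -. Running it twice leaves a single entry. The SHELL=/bin/bash line isn't needed this way, as long as the script starts with #!/bin/bash and is executable.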

Checking the Logic App run history: good, it's running every 30 minutes 🙂

Developing the Logic App

So up to this point the Logic App contains just one step: a trigger that receives the data and does nothing with it.

Let's send the data over to Power BI so I can quickly check it from the mobile app whenever I'm interested.

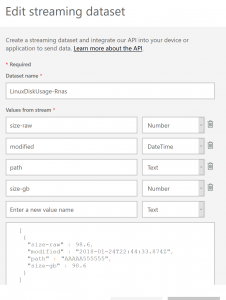

First up, head to Power BI, click on a workspace and add a dataset. I'm going to use a streaming dataset with the API model.

You provide the field names and that’s it.

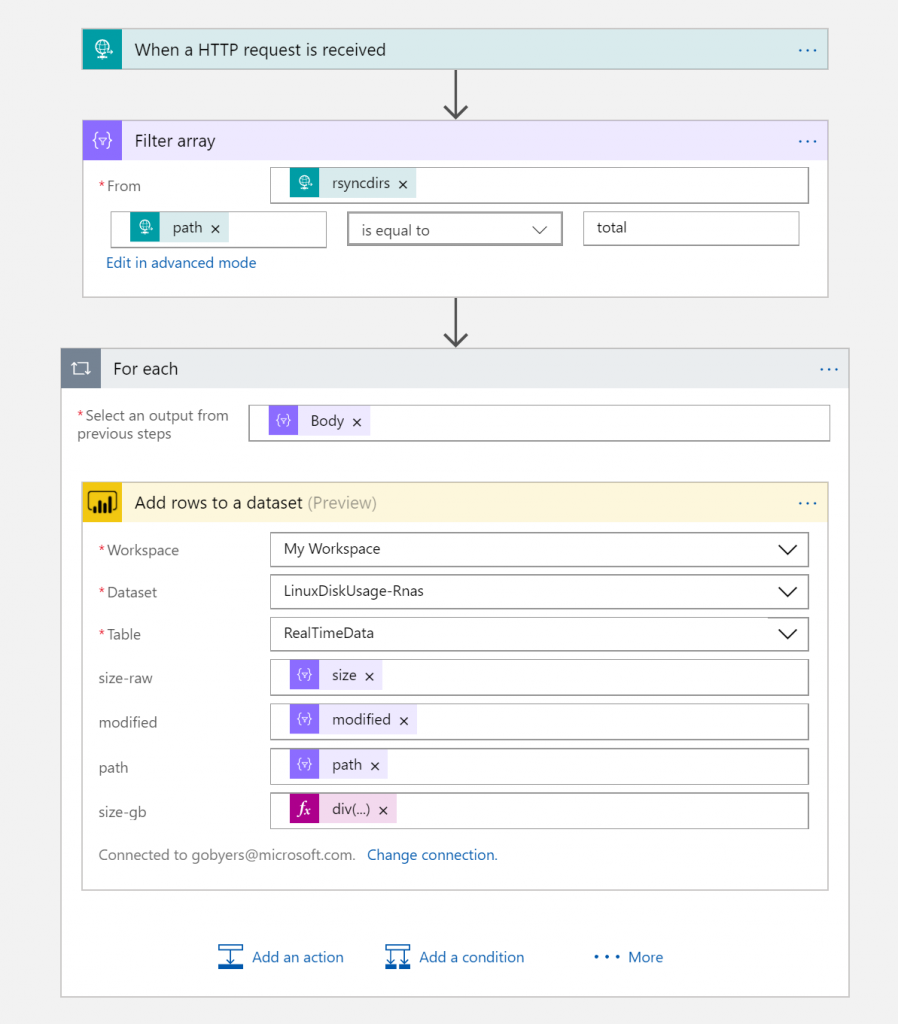

Next, we add a few more actions to the Logic App:

– Filter Array (so we're only working with the total size item)

– Add rows to a Power BI dataset, inside a For each loop over the filtered items (hence the items('For_each') references below)

– A simple calculation to work out the size in GB from the KB size provided in the request

"body": {
  "modified": "@items('For_each')?['modified']",
  "path": "@items('For_each')?['path']",
  "size-gb": "@div(div(float(items('For_each')?['size']),1024),1024)",
  "size-raw": "@{items('For_each')?['size']}"
},
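The Filter Array and division steps can be mirrored locally to sanity-check the numbers before they reach Power BI. This sketch, total_row_json (a hypothetical name), keeps only du's total line. It converts kilobytes to GB by dividing by 1024 twice, like the div(div(...)) expression, and prints a one-row JSON array with the same field names as the body above. It assumes the dataset's size-gb field is numeric and the sizes are du's default 1K blocks (no -h).

```shell
#!/bin/bash
# Hypothetical helper: from tab-separated `du -c --time` output (sizes in 1K blocks),
# keep only the "total" line (like Filter Array) and emit one row with the size in GB.
total_row_json() {
  awk -F'\t' '$3 == "total" {
    # KB / 1024 / 1024 = GB, matching div(div(float(size),1024),1024) in the Logic App.
    printf "[{\"modified\": \"%s\", \"path\": \"%s\", \"size-gb\": %.2f, \"size-raw\": \"%s\"}]\n", $2, $3, $1 / 1024 / 1024, $1
  }'
}
```

For instance, a total of 732954624 KB comes out as 699.00 GB. A row in this shape could in principle be posted straight to the streaming dataset's push URL with curl, but going through the Logic App keeps the option of swapping connectors later.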

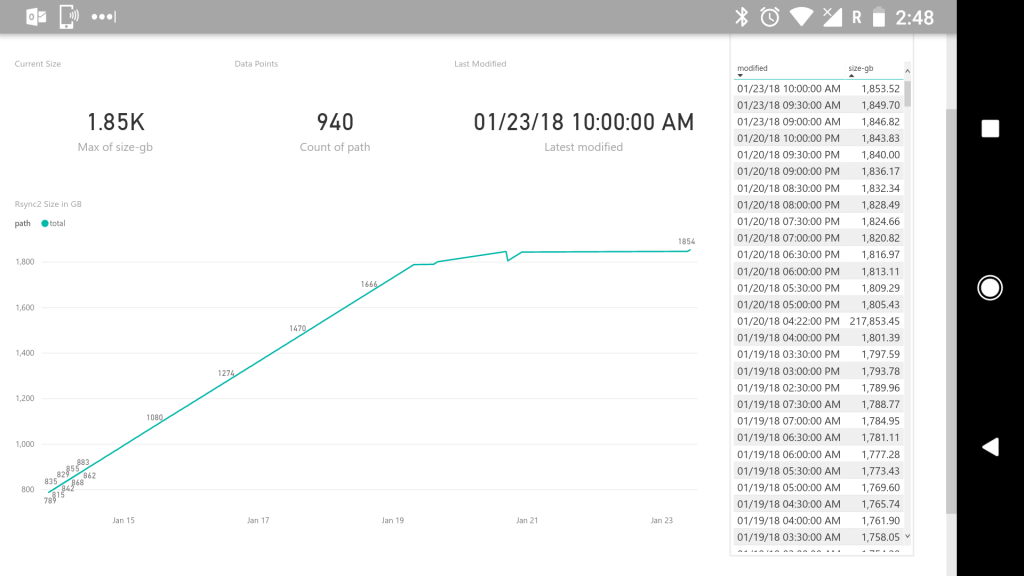

Power BI Reports

So, once data is flowing into a Power BI hybrid streaming dataset (a streaming dataset with historic data analysis turned on), we can craft some reports.

Data over time