Azure AI Text to Speech

Synthesised speech is a really neat capability to play with. I’ve always had a soft spot for TTS, ever since playing with “say” on my Amiga 1200. In this blog I’m specifically using the Azure AI speech service suite, but the markup language (SSML) is not exclusive to Microsoft: it’s used by other cloud providers like Google and AWS too.

Speech Studio

Microsoft’s AI APIs can also render Digital Avatars reading along with the synthesised voice track. The best introduction to this is the Speech Studio portal, https://speech.microsoft.com, which wraps the APIs nicely. It lets you efficiently browse the gallery of Digital Avatars and voices to find the right one for you.

I’d suggest starting with the speech first, using the Speech Studio TTS. This gives you a much faster developer inner loop: you can get the sentence structure correct and tweak pronunciation and voice attributes like speed and pitch, all using the UI in the portal. You can easily switch to SSML mode and grab the code for your voice script. Then move to the Digital Avatar section of Speech Studio and add gestures and a face to the voice.

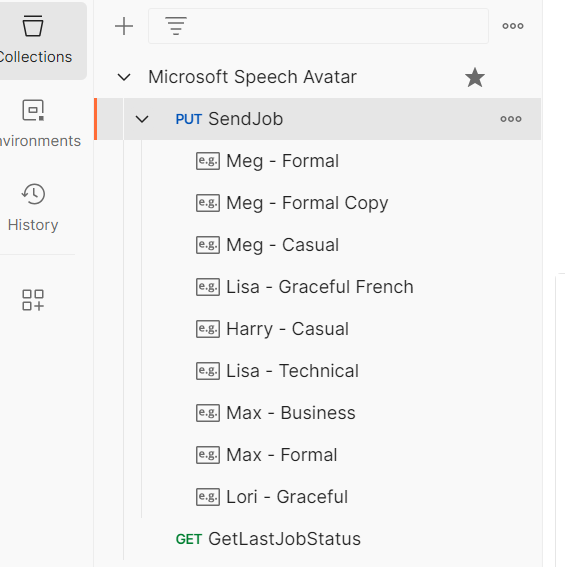

Postman Collection

Once you become more familiar with SSML and the avatar gestures, I’d suggest dropping Speech Studio because of the lengthy, synchronous render times of the video. With your API key, you can use a Postman collection that I’ve written to quickly send the jobs and grab the mp4 output.

Declaring sentences

SSML is actually really simple when it comes to parsing text. It interprets the full stop as the end of a sentence, automatically pauses, and then reads the following sentence.

In some of my code snippets below, I do however explicitly declare the sentence boundary. That’s generally because my audio clips are quite small and there’s no overhead to the <s> wrapper, and I’m actually a bit geeky and prefer this.
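If you'd rather not hand-wrap every sentence, the wrapping can be scripted. This is a minimal sketch in Python, assuming a naive split on sentence-ending punctuation is good enough for a short clip; `wrap_sentences` is a hypothetical helper of my own naming, not part of any Azure SDK.

```python
import re
from xml.sax.saxutils import escape


def wrap_sentences(text: str) -> str:
    """Wrap each sentence of plain text in an SSML <s> element."""
    # Naive split: break after '.', '!' or '?' when followed by whitespace.
    # Abbreviations such as "e.g. this" would be split incorrectly.
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    # escape() protects characters like '&' and '<' that would break the XML.
    return "".join(f"<s>{escape(part)}</s>" for part in parts if part)


print(wrap_sentences("Hello there. This is the second sentence."))
```

The result still needs to sit inside the usual `<speak>` and `<voice>` elements before it is sent for synthesis.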

Gestures

Timing of the delays can be a little tricky; you’ll need to resort to trial and error with the avatar renders to get it just right. For many of my short video clips, I declare the gesture immediately, and by the time the second sentence is spoken the gesture is injected.

<bookmark mark='gesture.number-one'/><s>First sentence text</s><s>Sentence that corresponds to the gesture</s>
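When you're iterating on timing by trial and error, it helps to generate the snippet rather than retype it. The sketch below builds the same shape as the example above: a bookmark first, then the sentences. `with_gesture` is a hypothetical helper, and the gesture name is simply passed through, so it's on you to use one that your chosen avatar supports.

```python
from xml.sax.saxutils import escape, quoteattr


def with_gesture(gesture: str, sentences: list[str]) -> str:
    """Prefix a run of <s> sentences with a gesture bookmark."""
    # quoteattr() adds the surrounding quotes and escapes the value.
    bookmark = f"<bookmark mark={quoteattr('gesture.' + gesture)}/>"
    body = "".join(f"<s>{escape(s)}</s>" for s in sentences)
    return bookmark + body


print(with_gesture("number-one", [
    "First sentence text",
    "Sentence that corresponds to the gesture",
]))
```

To shift when the gesture lands, reorder or resize the sentences in the list and re-render.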

Delaying the audio

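I had quite a few problems with the audio starting immediately and losing the first part of the word in the video render. To combat this, I found that declaring an additional sentence at the beginning of the narrative helps: a sentence containing only a semicolon, with the silence for semicolons set to an exact duration.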

<mstts:silence type='semicolon-exact' value='250ms'/><s>;</s><s>First sentence</s>
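The lead-in can be bolted on with a tiny helper so every script gets the same delay. This is a sketch only: `with_lead_in` is a hypothetical name, and the 250ms default is just the value used above, so tune it to your own renders. It uses single-quoted attributes, which are valid XML and don't need escaping if the SSML ends up inside a JSON request body.

```python
def with_lead_in(ssml_body: str, pause_ms: int = 250) -> str:
    """Prepend a silent semicolon-only sentence to delay the first word."""
    # The silence element sets an exact pause length for semicolons.
    silence = f"<mstts:silence type='semicolon-exact' value='{pause_ms}ms'/>"
    # The extra sentence holds only a semicolon, so nothing audible is spoken.
    return f"{silence}<s>;</s>{ssml_body}"


print(with_lead_in("<s>First sentence</s>"))
```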

Pronunciation

There are often words that the default synthesis needs some help with; acronyms are particularly problematic. It’s relatively easy to fix this though: just create a phonetic alias and wrap the problematic word in it, like this:

<sub alias="ess ess emm ell">SSML</sub>
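If the same acronyms keep cropping up across scripts, a small lookup table saves wrapping them by hand each time. A minimal sketch, assuming whole-word, case-sensitive matching is what you want; `ALIASES` and `apply_aliases` are hypothetical names, and the single entry is the alias from the example above.

```python
import re
from xml.sax.saxutils import escape, quoteattr

# Word -> phonetic spelling. Extend with your own problem words.
ALIASES = {"SSML": "ess ess emm ell"}


def apply_aliases(text: str, aliases: dict[str, str]) -> str:
    """Wrap every whole-word match from `aliases` in a <sub alias=...> element."""
    if not aliases:
        return escape(text)
    # Longest words first so a longer acronym wins over a shorter prefix.
    words = sorted(aliases, key=len, reverse=True)
    pattern = re.compile(r"\b(" + "|".join(re.escape(w) for w in words) + r")\b")
    out, pos = [], 0
    for match in pattern.finditer(text):
        out.append(escape(text[pos:match.start()]))  # plain text before the match
        word = match.group(1)
        out.append(f"<sub alias={quoteattr(aliases[word])}>{escape(word)}</sub>")
        pos = match.end()
    out.append(escape(text[pos:]))  # trailing plain text
    return "".join(out)


print(apply_aliases("I write SSML by hand.", ALIASES))
```

Run it on the plain text before adding any other markup, because it escapes characters like `<` and `&` in its input.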